fast.ai, week 2

18 Aug 2017

. category:

Notes

.

Comments

Week 2 of fast.ai is officially under my belt! Judging by my completion date for Week 1, Week 2 took me a little longer than a week, but I’m glad I found and spent the time necessary outside of work to understand this material.

Stanford CNN Pages1

I have now read some of the clearest technical writing I’ve seen thus far on deep learning: the Stanford CS231n (or the Stanford CNN pages, as the course likes to term them) course material on stochastic gradient descent (SGD), backpropagation, and neural network architectures2. At first, I questioned why this math-heavy material was even assigned, considering this course’s emphasis on practicality. But just keeping that practical emphasis in mind helped relax my expectations so that I could instead focus on building intuition. Understanding every single equation wasn’t the goal, and the reading turned out to be quite enjoyable.

The teaching staff agrees with this intuition-based approach:

There are some fantastic readings that cover similar material to this week’s course. I’ve added them to the lesson wiki page. In particular, the Stanford CNN course is some of the best technical writing I’ve seen, and is totally up to date. If you have 8 hours to spend this week, spending half that time reading the Stanford notes would be an excellent use of your time this week. — Jeremy Howard on the forums

Definitely more about getting intuition! Also, don’t let trying to understand all the details bog you down, because the course returns to concepts several times, with progressively more detail as time goes on. — Rachel Thomas on the forums

Jupyter Notebooks: Reading vs Running

This week, the utility of learning in an interactive environment like Jupyter really hit home for me. The course has provided an opinionated outline on how to spend the 10 hours/week recommended to thoroughly understand the material3. For Week 1, I stuck close to the outline, referencing it throughout the week. By Week 2, I repeated the steps from memory - but instead of running the notebooks interactively like I should have, I attempted to just read through the notebooks on GitHub (I was also partially trying to minimize the amount of time my AWS P2 instance needed to be running, since the GPUs cost $0.90/hour).

As I read through the notebooks though, I would naturally have questions about a line of code, about inputs and outputs to functions, about what functions were doing internally - but I didn’t have a direct way of answering these questions for myself. Google and wiki.fast.ai searches were only taking me so far.

When I finally remembered that I should have been running the notebooks myself - it was honestly like a breath of fresh air. Suddenly, I could create a new cell and ??vgg.finetune whenever I needed to recall what exactly that function was doing. Or I could mess with prior lines to see how outputs would change further down. Running the notebook allows me to experiment as I learn - and I won’t be forgetting that step again.

Drawbacks of Top-Down Learning

So I finally got to a point where I felt comfortable with the week’s readings and accompanying notebooks to create my own notebook from scratch. Instead of using the standard Cats vs Dogs dataset, I wanted to continue what I had tried in Week 1 with the slightly-more-difficult StateFarm Distracted Driver Detection dataset4.

Fast forward to finally getting this model predicting… and alas, it sucked. A lot. The model was barely doing better than random guessing at the 10 categories of distracted driving. It didn’t make any sense to me because everything I did should have at least replicated my Week 1 results - I was just relying less on the course’s helper methods, implementing those pieces myself in raw Keras code.

So I spent several hours debugging5, and I was getting pretty frustrated. There were no obvious mistakes being made, as far as I could tell. And that’s when I noticed that despite using the optimization algorithm provided in lesson2.ipynb (RMSprop(lr=0.1)), lesson1.ipynb had been using another optimizer under the hood (Adam(lr=0.001)). This didn’t strike me as important, but feeling pretty desperate, I made the change in my notebook just to see.

Lo and behold: Adam gave me the boost I needed to return to Week 1 result accuracy levels.

I wrote the following in my notebook after seeing this surprising boost:

This significant performance improvement is pretty frustrating, as differences between the various optimizers used for gradient descent have not been covered yet. I was using RMSprop like the

lesson2.ipynbnotebook from the course materials, on the faith that it is a good default. Perhaps my mistake was assuming that because it’s a good default for cats vs dogs, it translates to this competition… but there’s a lot of assuming going on so early in this course right now, so how to choose which assumptions to question? Feels like a big downside of top-down (/whole game/breadth-first) learning.

Calmer-me now understands that 1) a huge part of this course is left to experimentation and building your own intuition (as positively discussed earlier); and 2) I went slightly off-script by selecting a more difficult dataset while still applying techniques possibly meant for the easier dataset. However, I do still agree with frustrated-me about this being a noteworthy con for this teaching philosophy.

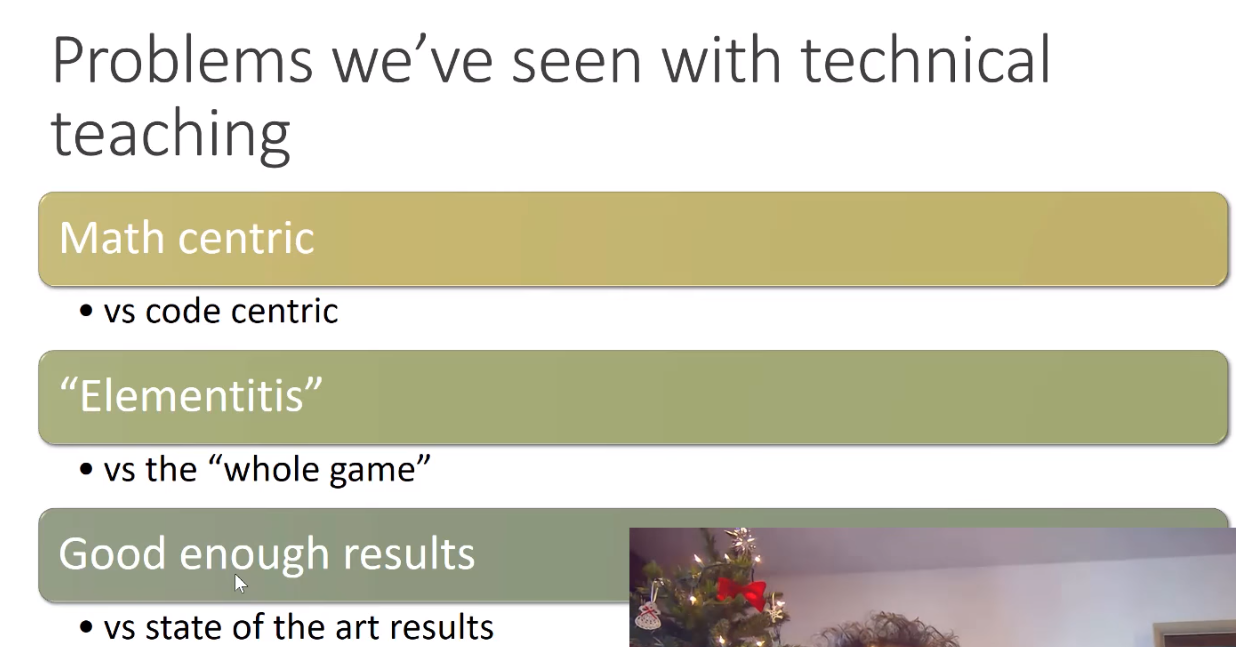

— Screenshot from Lesson 1 Overview that summarizes this course’s teaching philosophy and how they have chosen to reconcile with problems in traditional technical teaching

— Screenshot from Lesson 1 Overview that summarizes this course’s teaching philosophy and how they have chosen to reconcile with problems in traditional technical teaching

My “whole game” issue is: when is a student supposed to trust that some detail will be covered later on versus dig in and maybe even fret over a lack of understanding?6

Footnotes

-

CNN stands for “Convolutional Neural Network” - a type of neural network widely used for image recognition tasks ↩

-

Here are the specific readings assigned by the course: Optimization: Stochastic Gradient Descent – Backpropagation, Intuitions – Neural Networks Part I: Setting up the Architecture ↩

-

The breakdown is roughly as follows: 1) Watch the ~2 hours of lecture video to gain the high-level ideas; 2) Read the wiki and recommended readings, then try the lesson notebooks out for yourself (~5 hours); 3 Watch the lecture video again to get more detail. ↩

-

See my record of failed attempts under Iteration Record ↩

-

I still don’t know why Adam made such a huge difference over RMSProp. I’m going to trust this will be covered more in further weeks - although the trust comes with more hesitation now. ↩